In my previous blog I have covered most popular classification algorithm K-Nearest Neighbors.

Today we will look into another popular classification algorithm Naive Bayes.

Naive — Means we are making a naive assumption on features, Each feature is independent on each other.

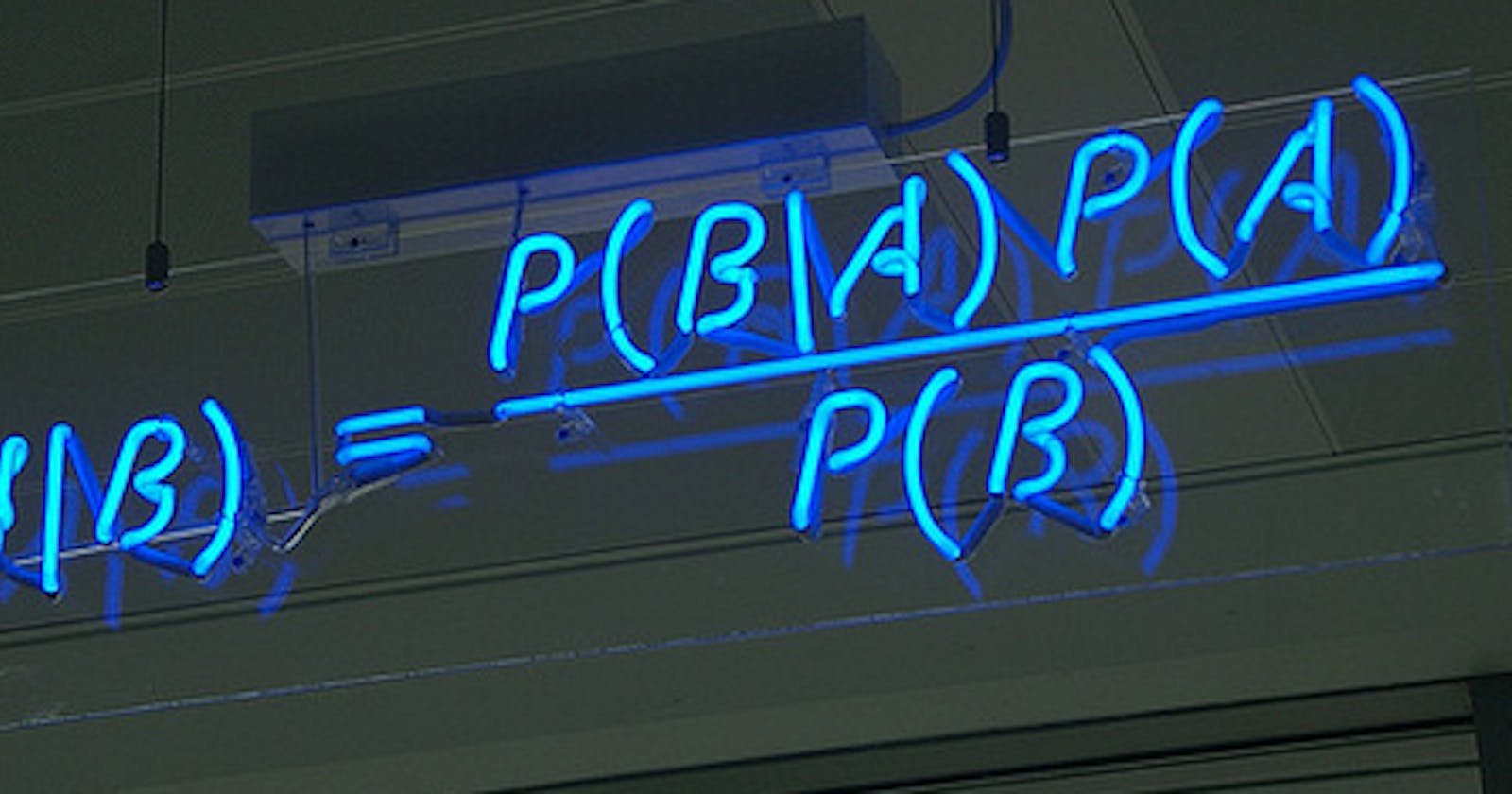

Bayes — refers to the author name Thomas Bayes.

Naive Bayes is considered as benchmark for text classification.

Breaking down Naive Bayes:

Brief explanation of Naive Bayes:

Consider this dataset:

Class variable of the dataset is Play feature, where outcomes are Yes (or) No.

We have features Outlook, Temperature, Humidty and Wind.

Features and their corresponding categorical values:

P(Outlook=o|ClassPlay=b), where o ∈[Sunny, Overcast, Rainy] and b ∈ [yes, no]

P(Temperature=t|ClassPlay=b), where t ∈[Hot, Mild, Cool] and b∈ [yes, no],

P(Humidity=h|ClassPlay=b), where h∈ [High, Norma] and b∈ [yes, no],

P(Wind=w|ClassPlay=b), where w ∈ [Weak, Strong] and b ∈ [yes, no].

Computing Frequency table & probability for each feature with class feature:

We can also calculate frequency table for Class vs Class variable:

Let’s say, we get a new instance of the weather condition, x’=(Outlook=Sunny, Temperature=Cool, Humidity=High, Wind=Strong) that will have to be classified (i.e., are we going to play tennis under the conditions specified by x’).

P(ClassPlay=Yes|x’) = [P(Sunny|ClassPlay=Yes) × P(Cool|ClassPlay=Yes) ×

P(High|ClassPlay=Yes) × P(Strong|ClassPlay=Yes)] ×

P(ClassPlay=Yes)

= 2/9 × 3/9 × 3/9 × 3/9 × 9/14 = 0.0053

P(ClassPlay=No|x’) = [P(Sunny|ClassPlay=No) ×P(Cool|ClassPlay=No) ×

P(High|ClassPlay=No) × P(Strong|ClassPlay=No)] ×

P(ClassPlay=No)

= 3/5 × 1/5 × 4/5 × 3/5 × 5/14 = 0.0205

Since P(ClassPlay=Yes|x’) less than P(ClassPlay=No|x’), we classify the new instance x’ to be “No”.

For every feature category similar table is calculated and stored in dictionary.

Complexity:

Time complexity — O(ndc)

Space complexity — O(dc)

Test time complexity — O(dc)

Naive Bayes is much better in the time of space complexity and runtime time complexity.

Mostly used in text classification, Spam-filter, Positive/Negative.

Naive Bayes on Text Dataset :

After performing NLP we would have d-number of unique words in our list, where each is considered to be a feature.

W = [W1, W2, …..Wd] are the list of features in Dataset after NLP.

Then,

Considering probability of a word in train data:

let Wi =’Vancouver’ be the random word.

How to calculate probability of a word ‘Vancouver’ in Train Data which is present in test dataset but not in train dataset:

Missing word:

If its not present in train dataset, we wont be able to find the frequency table nor probability of the ‘Vancouver’ corresponding to class in memory for computing Probability for testing the model:

Probability turns out to be 0, as # of times ‘Vancouver’ present in train dataset is 0.

To overcome this problem we do laplace smoothing:

We add α and K to our prob term for each word in train and test dataset.

K= # of unique values Wi can take, in otherwords for text its 0 or 1. If its a word, binary count is considered and if its a feature with numbers all the unique values in the features are consider.

α — can be any number, typically its 1, its called add-one smoothing.

α Behaviour:

As α increases, we are trying to move likelihood probability to uniform distribution.

we apply laplace transform to make it stable.

α is the only hyperparameter in Naive Bayes.

α determines the model is overfit ot underfit.

Log Probability for numerical stability:

P( Y=1 | W) = P(Y) P(W1 | Y=1) ……P(Wd | Y=1)

= 0.2 0.4 ……. 0.5

=> When we multiply 100s of numbers in decimal points we undergo numerical stability and numerical underflow. If the decimal points are more than 16 digits, Python stars to do rounding.

Solution: We take log on both sides

Bais and Variance Tradeoff:

How α affecting the model and Bais Variance Trafeoff

Case 1: α = 0

Say if there are rare words in our Train-Dataset, like civilisation, Psychology etc. The frequency of those words is very less, might be 2 or 3 max.

Say if we remove those rarely used words from our model, this causes more change in our model => high variance => over fitting.

This leads to overfitting as we are considering even rarely repeated words for training model.

Case α =10000 (very large):

So here determining factor boils down to the term P(Y= 1 or 0)

Whichever class has more count, query point Xi belongs to that respective class. Like similar thing happened in KNN.

Feature Importance & Interpretability for NB:

Feature importance can be attained using model itself.

As we have probability score of livelihood term, P (Wi | Y= 1) or P (Wi | Y= 0), Sort the values in descending order, top values are considered as important features/ words.

We could say that words with high livelihood probability are the important words for determining +ve class label. Similarly for the words in -ve class label.

Handling Imbalanced Dataset:

Up sampling or Down Sampling, they make the prior term 1/2.

Instead of making prior term 1/2 we can just remove the term itself as both means the same. ( this is mostly used)

NB theorem can be modified for unbalanced dataset but this is not often used.

Handling outliers:

Ignore words which occurs less than n-times.

Laplace Smoothing will take care of outliers both in train and test dataset.

α -> must be reasonable. As α have more effect on terms with less numerator.

Happy Learning!

Understanding K-NN Algorithm.

Feel free to connect: